The arXiv repository hosts 1.5 million academic papers, and users add over 10K papers per month. Keeping track of trends is challenging because discovery is limited to keyword search: users cannot track and explore content by themes that capture relationships beyond shared words, nor get recommendations from this perspective. This challenged us to use unsupervised learning to learn topics that summarise documents, and to let users explore and find research papers on similar topics.

Introduction

In this article, we will give a brief introduction to Reinforcement Learning and learn how to train a Deep Q-Network (DQN) agent to solve the “Lunar Lander” environment in OpenAI Gym. We will use Google’s DeepMind and Reinforcement Learning implementation for this.

In this article, we will work with decision trees to perform binary classification according to some decision boundary. We will first build decision trees capable of solving useful classification problems, then train them, and finally test their performance.

The code used in this article and the complete working example can be found in the Git repository below:

On the planet Naboo, the droid R2D2 is serving Queen Amidala and has received some important documents belonging to the Dark Lord, Darth Vader.

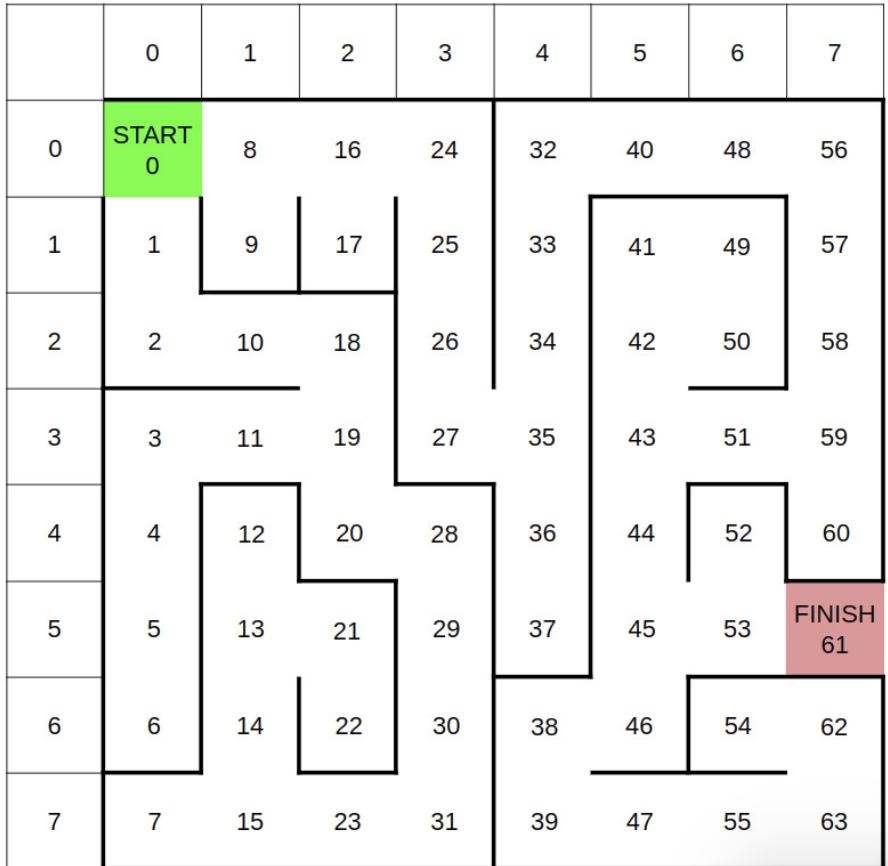

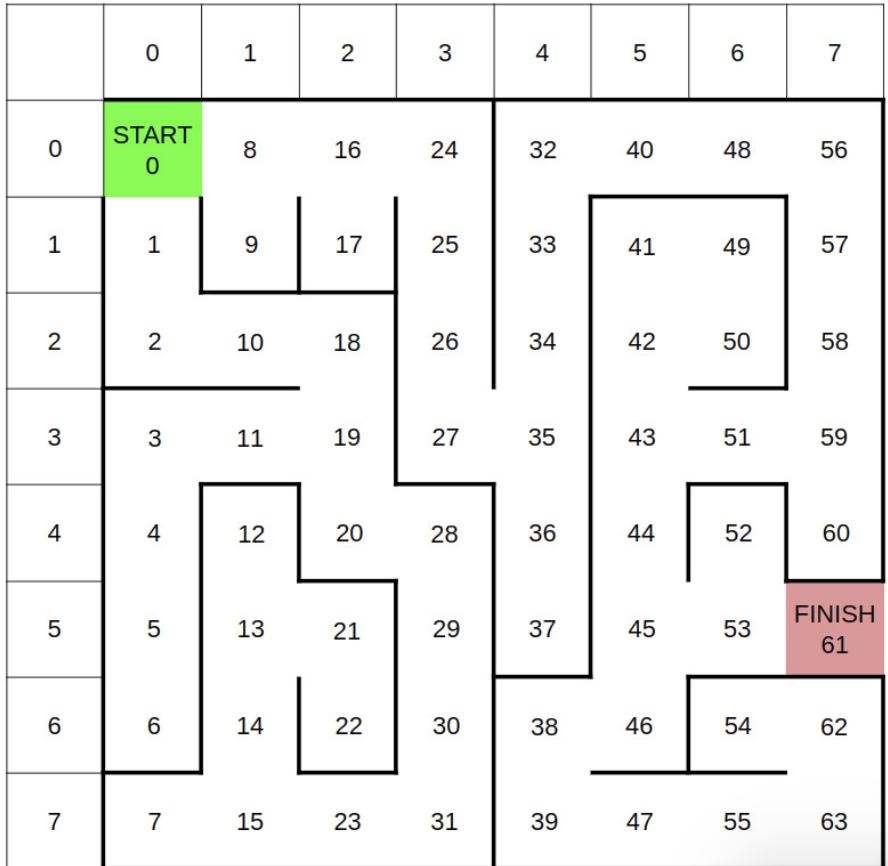

As soon as the Dark Lord finds this out, he sends his army after R2D2 to recover the documents from him. Fearing Darth’s army, R2D2 hides in a cave. While entering the cave, R2D2 finds a map of it and learns that he is at grid location 0 and needs to reach grid location 61 to get out.

Darth’s army has learned that R2D2 is hiding in the cave and has set up explosives inside it that will go off after a certain time.

Let us use our knowledge of AI to help R2D2 search for a path out of the cave.

R2D2 will follow these rules when searching the cave (they are hardcoded in his memory):

- The (x, y) coordinates of each node are defined by the column and the row shown at the top and left of the maze, respectively. For example, node 13 has (x, y) coordinates (1, 5).

- Process neighbours in increasing order. For example, if processing the neighbours of node 13, first process 12, then 14, then 21.

- Use a priority queue for your frontier. Similar to Assignment 2, add tuples of (priority, node) to the frontier. For example, when performing UCS and processing node 13, add (15, 12) to the frontier, then (15, 14), then (15, 21), where 15 is the distance (or cost) to each node. When performing A*, use the cost plus the heuristic distance as the priority.

- When removing nodes from the frontier (or popping off the queue), break ties by taking the node that comes first lexicographically. For example, if deciding between (15, 12), (15, 14) and (15, 21) from above, choose (15, 12) first (because 12 < 14 < 21).

- A node is considered visited when it is removed from the frontier (or popped off the queue).

- You can only move horizontally and vertically (not diagonally).

- It takes 1 minute to explore a single node. The time to escape the maze will be the sum of all nodes explored, not just the length of the final path.

- All edges have cost 1.
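The rules above can be sketched in Python as follows. This is a minimal illustration, not the article's own implementation. The function name `search`, the `neighbours` dictionary and the 3x3 `toy_maze` are all hypothetical, because the real cave map is only given as an image. The sketch assumes the goal node counts as explored and that a neighbour is pushed only when a cheaper route to it is found. With the default zero heuristic it performs Uniform Cost Search; passing a heuristic function turns the priority into cost plus heuristic, as in A*.

```python
import heapq


def search(neighbours, start, goal, heuristic=lambda node: 0):
    """Uniform Cost Search (zero heuristic) or A* on a unit-cost graph.

    Returns (path, explored), where explored is the number of nodes
    removed from the frontier and marked as visited.
    """
    frontier = [(heuristic(start), start)]  # (priority, node) tuples
    cost = {start: 0}                       # cheapest known cost to each node
    parent = {start: None}
    visited = set()
    explored = 0

    while frontier:
        # Tuples compare element by element, so equal priorities are
        # resolved by the smaller node number.
        _, node = heapq.heappop(frontier)
        if node in visited:
            continue  # stale entry for a node that was already visited
        visited.add(node)  # a node is visited when it is popped
        explored += 1

        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1], explored

        for nxt in sorted(neighbours[node]):  # neighbours in increasing order
            new_cost = cost[node] + 1         # all edges have cost 1
            if nxt not in visited and new_cost < cost.get(nxt, float("inf")):
                cost[nxt] = new_cost
                parent[nxt] = node
                heapq.heappush(frontier, (new_cost + heuristic(nxt), nxt))

    return None, explored


# Hypothetical 3x3 maze (nodes 0..8, node 4 is a wall), NOT the cave map.
toy_maze = {
    0: [1, 3], 1: [0, 2], 2: [1, 5], 3: [0, 6],
    5: [2, 8], 6: [3, 7], 7: [6, 8], 8: [5, 7],
}

path, minutes = search(toy_maze, start=0, goal=8)
print(path, minutes)
```

On this toy maze the search returns the path `[0, 1, 2, 5, 8]` after exploring 8 nodes, so under the one-minute-per-node rule the escape would take 8 minutes even though the path itself has only 5 nodes.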

If R2D2 uses Uniform Cost Search, how long will it take him to escape the cave?

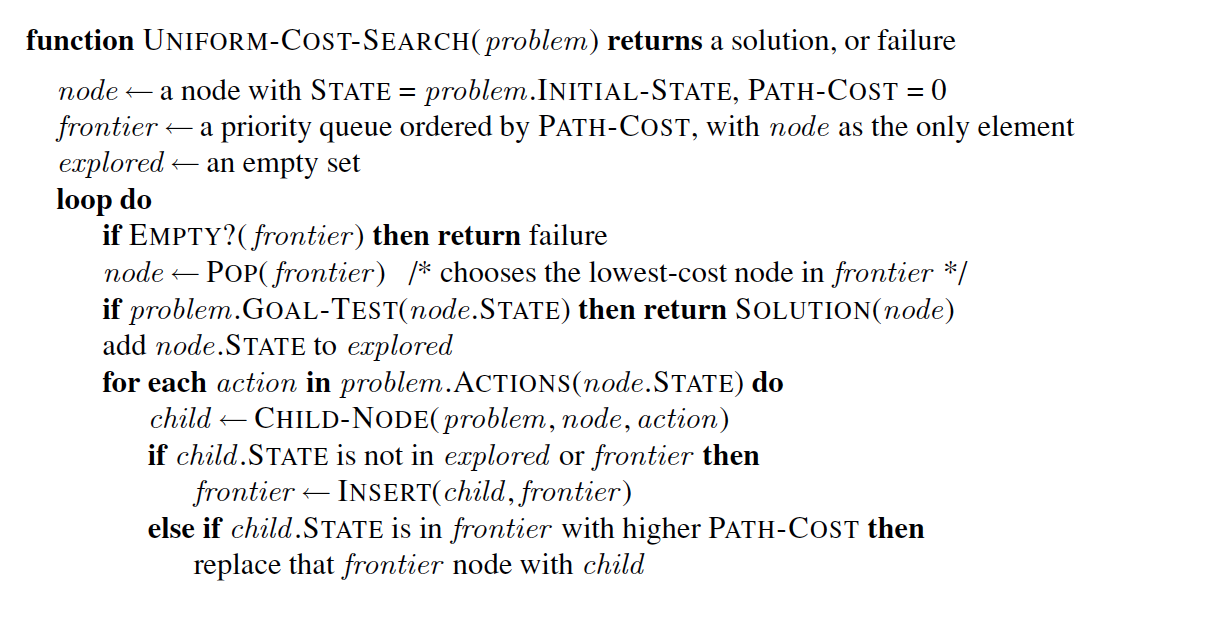

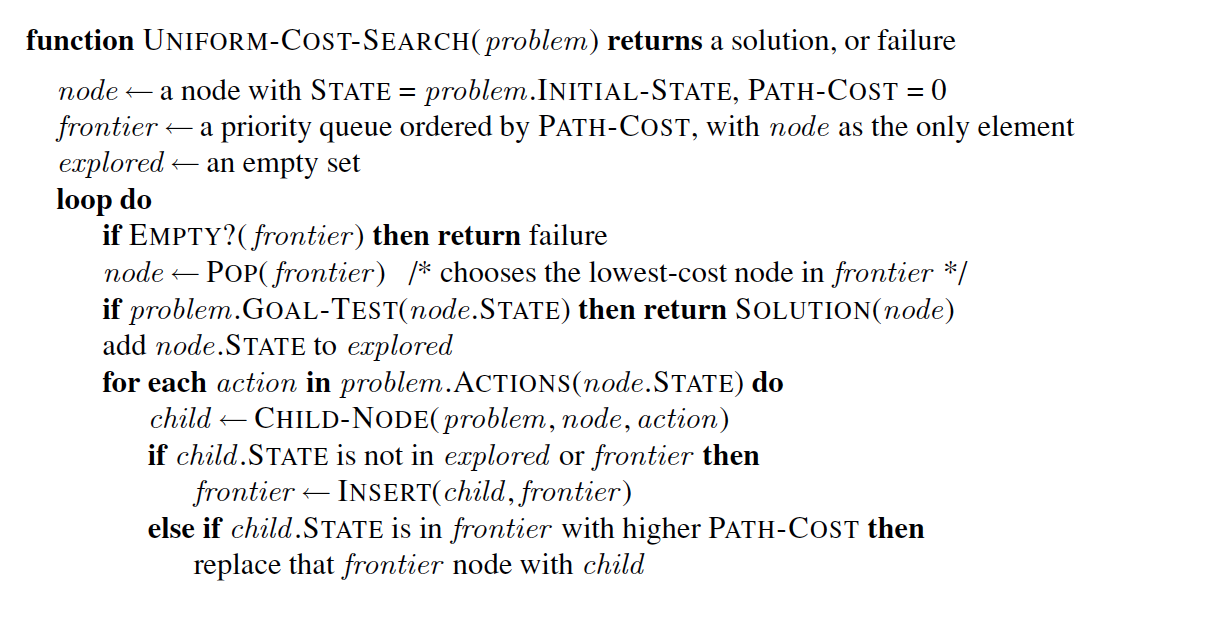

Below is the pseudocode for Uniform Cost Search: